namR is the leader in real estate information dedicated to the environmental transition

namR collects available external data to enrich your building data and to accelerate both your understanding of your building stock and its ecological planning.

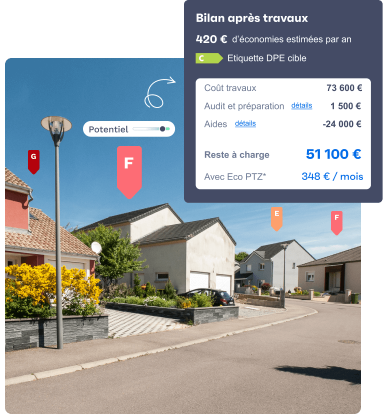

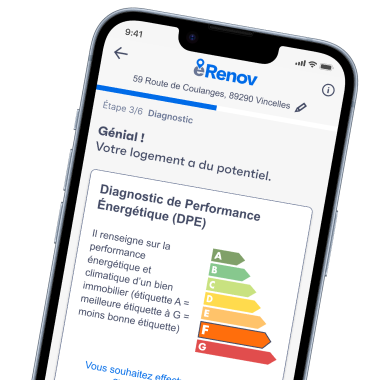

Energy renovation

Prioritize the homes to renovate and move into action faster!

Solar energy

Accelerate the development of solar energy!

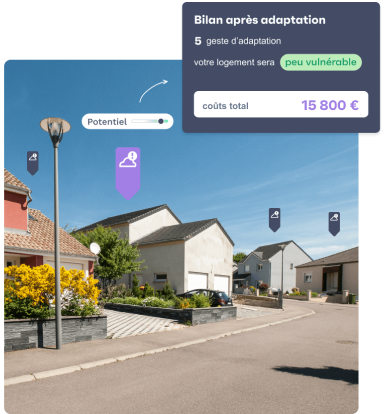

Climate adaptation

Be more proactive in preventing climate risks!

- Energy renovation: 20 million homes to renovate by 2050
- Solar energy: 100% renewable energy by 2050
- Climate adaptation: 6 out of 10 homes* exposed to a major risk by 2050

Decarbonize your portfolio faster

namR's data and energy simulator have already enabled more than 6 banking and public-sector organizations to achieve measurable results (comprehensive knowledge of their portfolio, more client appointments, ...)

Decarbonize your portfolio faster

namR data and simulators let you take your solar potential analyses further by accounting for the full set of characteristics of a roof and its immediate surroundings.

De-risk your portfolio faster

Accelerate your prevention mission with our data, which characterizes the vulnerability of 100% of homes to climate risks.

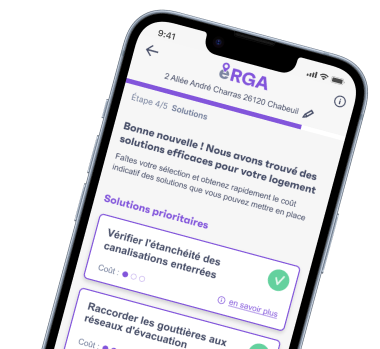

Accelerate your action and that of your clients

Several banks and insurers already use namR data and simulators to speed up the transformation and increase the impact of their offerings.

namR already knows everything there is to know about homes

namR has built an energy and climate database that is unique in being geolocated and available for 100% of residential buildings.

Expertise in sustainable housing transformation

Through its in-house experts and its partner network, namR has developed deep, cross-disciplinary expertise in the ecological transformation of housing.

Expertise in image analysis

namR draws on the best of big data (open data or partner data) to describe millions of buildings: their morphology, their surroundings, and their context.

Expertise in machine learning

namR builds algorithms to identify the best mitigation or adaptation solution, tailored to each individual home.

Control your trajectories, engage your clients

namR's AI solutions address all your challenges related to the transition risks and physical risks of your residential real estate portfolios.

- Portfolio characterization
- Risk scoring
- ESG reporting
- Pricing adjustment
- Marketing targeting
- Sales support
- Customer proximity
- Accelerated awareness-raising

"Online simulators tend to either cover a single type of work or be too complex; namR meets our need for a short simulator that is easy to fill in."

Discover the power of our AI now

namR simulators have already been adopted by all top-tier banking institutions and used by more than 100,000 users.